Google I/O 2018 – AI for everything

- Wednesday, May 9th, 2018

Google I/O, the firm’s annual developer conference, is here once again, and in last night’s keynote, the company unveiled a wealth of announcements that will be coming over the year ahead, with a focus on the power of AI and additional functions for Google Assistant, which will be integrated into a wider variety of the search giant’s products.

Just prior to the keynote, Google revealed that it would be rebranding its Google Research division to Google AI, a clear sign that artificial intelligence would be at the forefront of the year’s agenda and a reflection of how the firm’s R&D division has been increasingly centred around neural networks, computer vision and natural language processing.

Google Assistant becomes a Legend

Those advances in AI will carry over into the Google Assistant, with a “continued conversation” update designed to make interacting with the digital assistant feel more natural and flow more easily. Assistant is being integrated into Maps, enabling it to make more personalised recommendations, and is being rolled out to a wide variety of markets, with Google planning for the voice-controlled assistant to be available in 80 countries by the end of the year.

The drive towards more natural interactions on Google Assistant has also resulted in the Multiple Actions upgrade, which will enable the software to understand more complex queries that include multiple requests in a single command, like “What’s the weather like in London and Edinburgh?” The digital assistant is also being upgraded with a feature to encourage good manners in young users, customisable routines that can be tailored to individuals or families, and seven new voices, including that of singer John Legend.

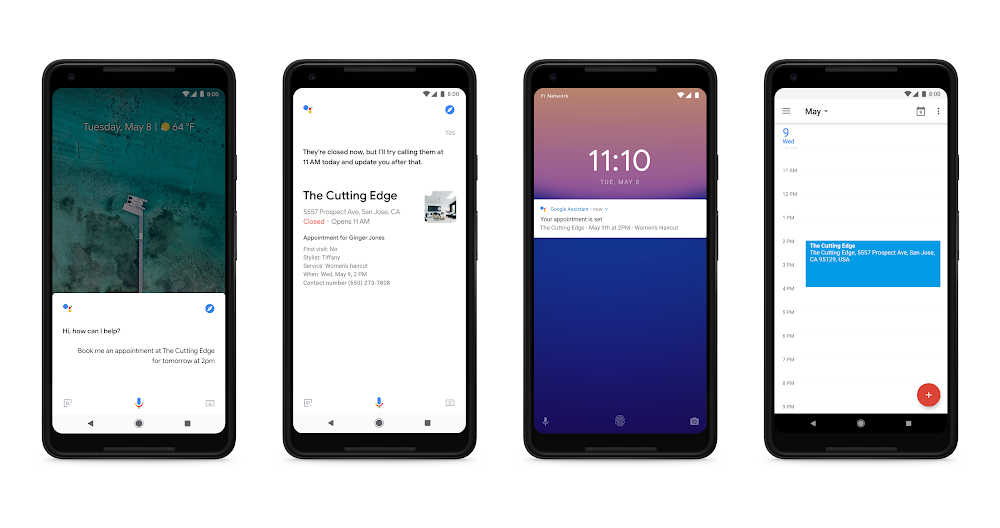

Perhaps the most exciting advance for Google Assistant is Google Duplex, which chief executive Sundar Pichai called an “experiment” designed to help the software cope with complex sentences, fast speech and long remarks. The demonstrated version was able to carry out complex tasks like booking a hair appointment, even phoning the salon and carrying out a conversation with a human that sounded far more advanced than digital assistants have in the past.

A feast for the eyes

When it comes to Google Assistant, the firm isn’t just expanding its capabilities, but also finding new ways for the software to reach consumers. Smart Displays, which were first unveiled at CES this year, will compete with Amazon’s Echo Show and enable users to access both Google Assistant and YouTube. The range of devices, the first of which will be available for purchase in July, can be interacted with using both voice and touch controls.

Speaking of visuals, Google Lens, which was introduced as an AI tool in Google Photos last year, is being expanded and will now be available directly in the camera app on a variety of devices from manufacturers including LGE, Motorola, Sony, Xiaomi and Nokia, as well as the Google Pixel.

The upgraded version of Lens will also gain new capabilities, including the ability to intelligently select text from the real world, a visual search tool that can hunt for similar items, and real-time capabilities, enabling it to proactively surface information instantly and anchor it to real-world objects.

The new Lens abilities are among the features powered by Google’s latest work in the AR sphere, following up on the launch of ARCore in February this year. ARCore’s first major update was announced during the keynote, with new abilities for AR apps including collaborative experiences thanks to Cloud Anchors, improved vertical plane detection, and faster development using Sceneform.

Google Photos is also getting an AI-powered upgrade, with machine learning used to recommend actions that can be taken on photos like brightening or rotating images, or sharing them on social media. The integrated Google Assistant in Photos will also enable the app to automatically detect elements in the photo which can then be recoloured.

In order to spread these tools as widely as possible, Google has also announced a partner program for Google Photos, enabling developers to support the software within their own apps. Google hasn’t announced the first wave of partners for this, but has said that apps and devices supporting this ability will be appearing in the coming months.

Mapping the future

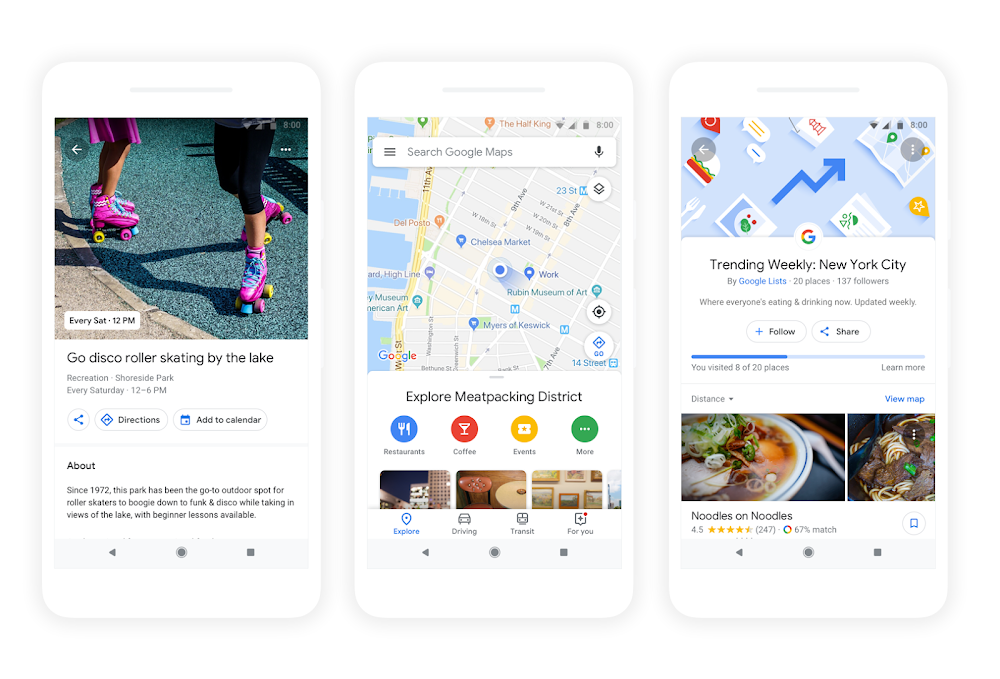

Google Maps has been a mainstay of Google’s app portfolio for more than 10 years now, but thanks to machine learning, the software is getting a significant boost in personalisation, with the aim of making it more assistive to users looking to explore their nearby area.

A redesigned Explore tab will become a hub for recommendations, with dining, event and activity options based on the area you are looking at. Trending lists will provide more information, based on reviews from local experts and trusted publishers, and managed by Google’s algorithms.

Machine learning comes into play most clearly in the new match feature, which appears when you tap on food or drink venues in the app. Using a variety of factors, Google Maps will judge how likely you are to enjoy the place, with recommendations and accuracy improving over time as your tastes and preferences evolve.

Other updates include easier sharing of places you’re interested in visiting, and a For You tab that includes up-to-date recommendations in areas you commonly visit, ensuring you don’t miss out on events and are aware of new openings.

Perhaps the most significant new tool comes from a combination of factors that we’ve already touched on. Google Assistant’s integration with Maps will enable users to combine their camera with computer vision technology, AR and Maps’ Street View mode. The result is like stepping inside a real-time version of Google’s Street View, with the ability to do things like identify buildings as you look at them, or translate street signs as you follow directions.

Developing a new world

Google I/O is primarily an event for developers, and one of the big pieces of news for them was the release of the beta version of Android P. The latest version of Google’s mobile operating system also deploys machine learning for abilities like Adaptive Brightness and Adaptive Battery, designed to optimise your power consumption, and App Actions, intended to help you move from task to task more easily.

App Actions use context to suggest different tasks, like surfacing your favourite Spotify playlist when you connect your headphones. This same kind of contextual help is present in Slices, which are designed to surface elements from an app when needed. For example, if you search for “Lyft” in Google Search, Slices can surface an interactive element that provides you with a price and time for a trip to work, and includes a button that enables you to order it then and there, without fully entering the app.

These kinds of machine learning-powered innovations will be available to developers thanks to ML Kit, a new set of cross-platform APIs available through Firebase. The on-device APIs include text recognition, face detection, image labeling and more. Other improvements to the OS include improved system navigation, a redesigned Dashboard, and tools to help you limit your time spent online and wind down at the end of the day.

As with Google I/O keynotes in the past, there were almost too many announcements to cover – we haven’t yet touched on Google’s next generation TPU 3.0 processors for improved machine learning, an update to Google News that combines AI and human curation, Smart Compose tools that will speed up email writing, and Google Pay getting support for mobile boarding passes and event tickets. However, as is the nature of this event, many of these improvements won’t be reaching consumers’ devices for a while yet, and we’ll be bringing you further coverage as more details emerge.